LOEWE CSC Cluster Frankfurt

Cluster Access

Goethe Universität Frankfurt am Main

The new cluster Goethe-HLR is online! The LOEWE cluster has been switched off.

The cluster is reserved for projects from academic institutions in Hessen. For access to the LOEWE CSC, please refer to the web page of Goethe University Frankfurt.

Typical Node Parameters

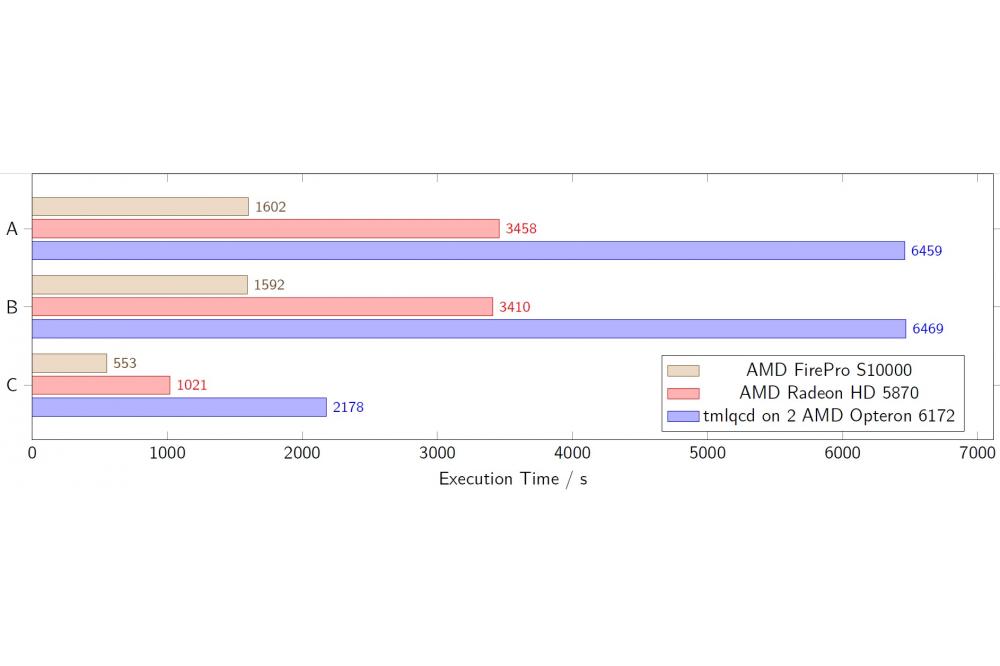

2x AMD FirePro S10000

1.5 TFLOPS (DP) each

Global Cluster Parameters

runtime: max. 30d