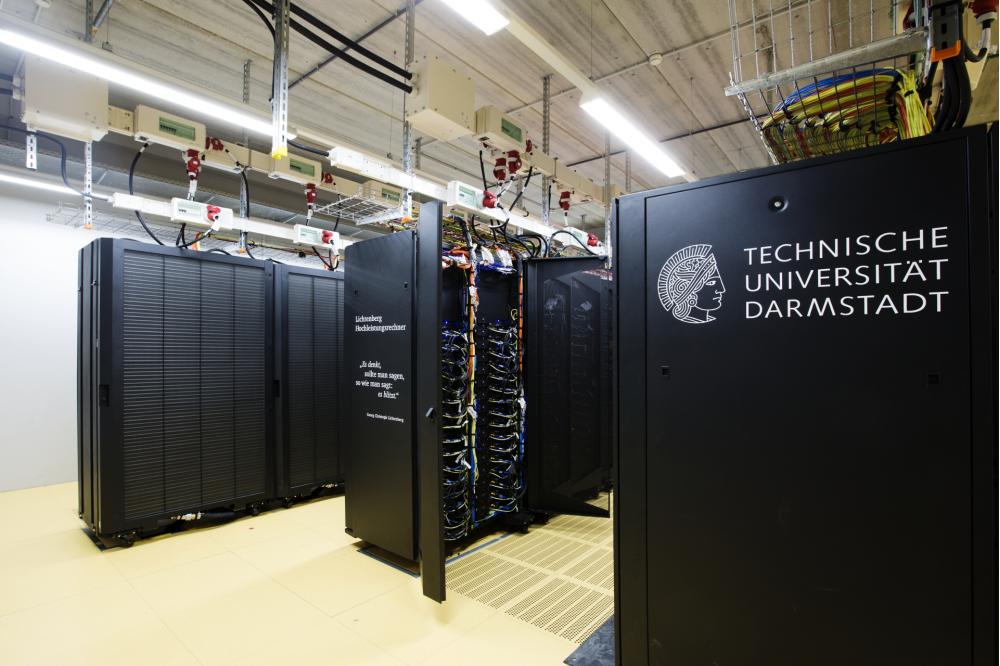

Lichtenberg Cluster Darmstadt

Cluster Access

Technische Universität Darmstadt

The Lichtenberg Cluster is located in Darmstadt and is a tier 2 cluster. Most of its processors are CPUs; some NVIDIA accelerators are also available.

Note: Phase I of Lichtenberg (Node Type A) is deactivated as of April 2020.

Cluster Introductions:

- Monthly consultation hours for project proposals, generally on the first Wednesday of each month.

- Monthly introductory courses on the Lichtenberg Cluster, generally on the second Tuesday of each month.

The cluster is open to researchers from academia and public research facilities in Germany. Access is subject to a scientific evaluation of the project, under the conditions set by the steering committee.

Typical Node Parameters

- 43 nodes with 2x NVIDIA K20Xm each (1.3 TFLOPS, 6 GB each)

- 2 nodes with 2x NVIDIA K40m each and 1 node with 2x NVIDIA K80 (1.4 TFLOPS, 12 GB each)
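The accelerator figures above can be totalled with a little arithmetic. The following is a minimal sketch, assuming that the leading number in each entry is a node count and that the TFLOPS and memory values apply per accelerator; neither reading is confirmed by the listing itself. The class and function names (`AcceleratorGroup`, `summarize`) are made up for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorGroup:
    """One line of the accelerator listing (interpretation is an assumption)."""
    model: str
    nodes: int            # assumed: number of nodes of this type
    per_node: int         # accelerators per node ("2x")
    tflops_each: float    # assumed: peak TFLOPS per accelerator
    memory_gb_each: int   # assumed: memory per accelerator in GB

    @property
    def accelerators(self) -> int:
        return self.nodes * self.per_node

    @property
    def peak_tflops(self) -> float:
        return self.accelerators * self.tflops_each


# Values copied from the listing above.
GROUPS = [
    AcceleratorGroup("NVIDIA K20Xm", nodes=43, per_node=2, tflops_each=1.3, memory_gb_each=6),
    AcceleratorGroup("NVIDIA K40m", nodes=2, per_node=2, tflops_each=1.4, memory_gb_each=12),
    AcceleratorGroup("NVIDIA K80", nodes=1, per_node=2, tflops_each=1.4, memory_gb_each=12),
]


def summarize(groups):
    """Return (accelerator count, aggregate peak TFLOPS, total memory in GB)."""
    total_accelerators = sum(g.accelerators for g in groups)
    total_tflops = round(sum(g.peak_tflops for g in groups), 1)
    total_memory_gb = sum(g.accelerators * g.memory_gb_each for g in groups)
    return total_accelerators, total_tflops, total_memory_gb
```

Calling `summarize(GROUPS)` yields the accelerator count, the aggregate theoretical peak, and the total accelerator memory under these assumptions. These are upper bounds derived from the listed figures, not measured performance.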

Global Cluster Parameters

runtime: 24h, max. 7d
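As a rough illustration of how these runtime limits might be checked before submitting a job, here is a minimal sketch. It assumes that 24 h is the standard runtime limit and 7 d the absolute maximum; the listing does not spell out that distinction. The function name `classify_runtime` and its return labels are hypothetical and not part of any cluster tooling.

```python
from datetime import timedelta

# Assumed reading of "runtime: 24h, max. 7d".
STANDARD_RUNTIME = timedelta(hours=24)
MAX_RUNTIME = timedelta(days=7)


def classify_runtime(requested: timedelta) -> str:
    """Classify a requested job runtime against the assumed limits."""
    if requested <= timedelta(0):
        raise ValueError("requested runtime must be positive")
    if requested <= STANDARD_RUNTIME:
        return "standard"      # fits within the 24 h limit
    if requested <= MAX_RUNTIME:
        return "extended"      # longer than 24 h but within 7 d
    return "exceeds maximum"   # longer than 7 d
```

For example, `classify_runtime(timedelta(hours=36))` falls into the extended range, while a request of eight days exceeds the listed maximum.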