Deep Implicit Collision Probability Fields

Introduction

In robotic applications such as autonomous driving and whole-body control, ensuring safety is of utmost importance. However, accounting for the uncertainty associated with the objects that robots interact with poses a significant challenge. Existing methods either rely on computationally expensive online Monte Carlo sampling or make simplifying assumptions that lead to suboptimal behavior. To address these limitations, we propose Regularised Deep Collision Probability Fields (ReDCPF), a deep learning model that approximates collision probability fields for articulated objects while taking the uncertainty in the obstacles' configuration into account. The dataset for training the model is generated with Monte Carlo sampling, which requires high-performance computing (HPC) resources.
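For reference, the quantity that the Monte Carlo labels estimate can be written as follows. The notation is introduced here for illustration and is not taken from the original work: q is the robot configuration, x the uncertain obstacle configuration with density p(x) (for example, a Gaussian whose variance is one of the dataset parameters), and C(q, x) the event that robot and obstacle collide.

```latex
P_{\mathrm{coll}}(q) = \int \mathbb{1}\!\left[\mathcal{C}(q, x)\right] p(x)\,\mathrm{d}x
  \approx \hat{P}_N(q) = \frac{1}{N}\sum_{i=1}^{N} \mathbb{1}\!\left[\mathcal{C}(q, x_i)\right],
  \qquad x_i \sim p(x)

\operatorname{SE}\big[\hat{P}_N(q)\big]
  = \sqrt{\frac{P_{\mathrm{coll}}(q)\,\bigl(1 - P_{\mathrm{coll}}(q)\bigr)}{N}}
  \le \frac{1}{2\sqrt{N}}
```

For example, bounding the standard error by 0.005 requires N = 10^4 samples per query point, which illustrates why labelling an entire dataset calls for HPC resources.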

Methods

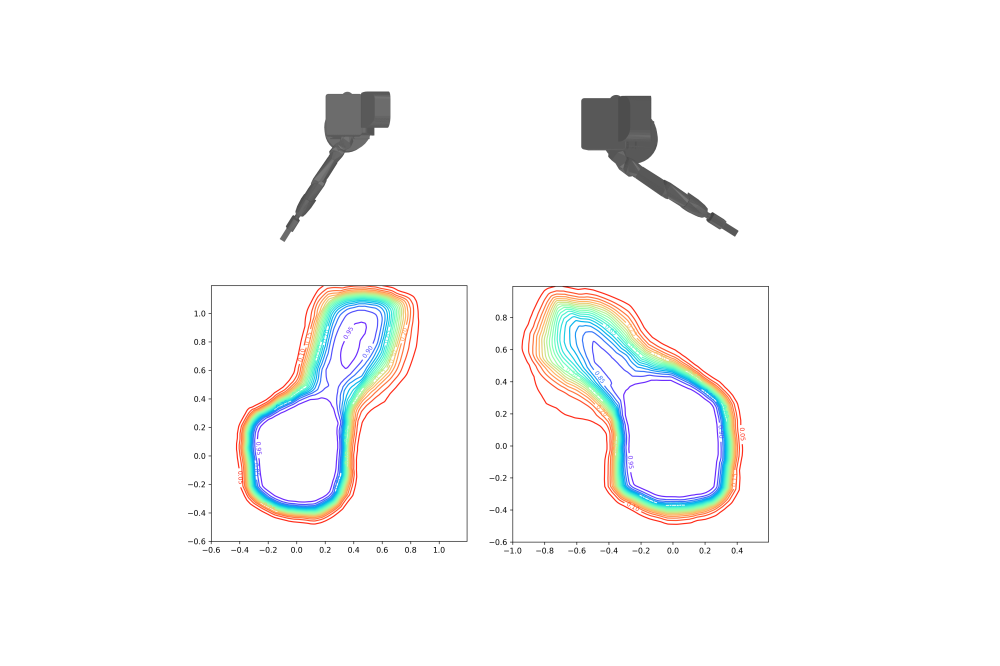

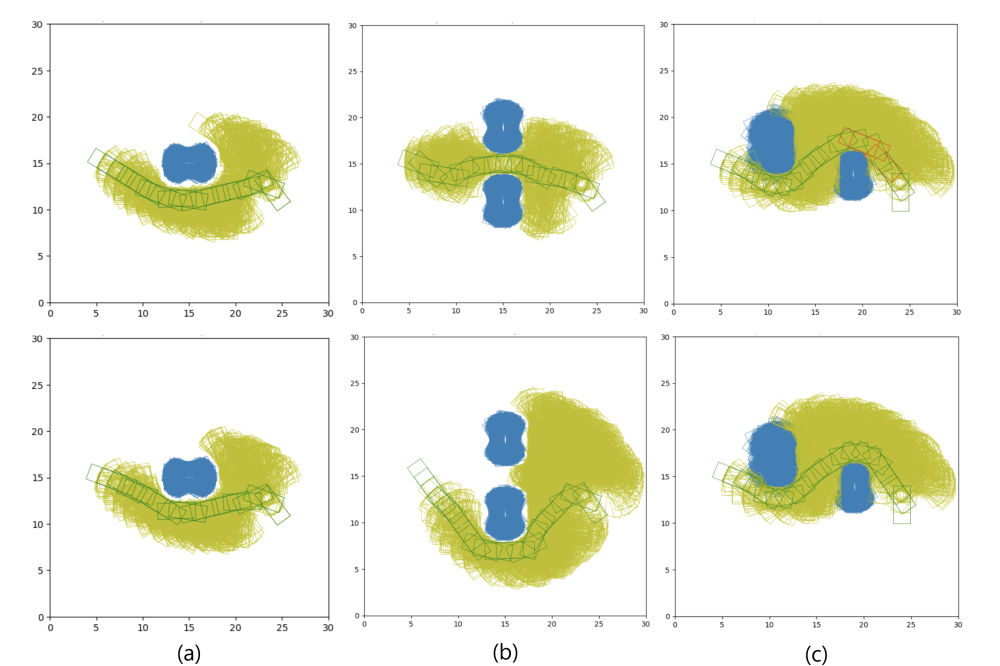

For autonomous vehicles, a top-down view with 2D rectangles was used to represent the vehicle shapes, reducing the number of configurable parameters. Robot positions were sampled from a ring-shaped distribution around the target object so that the dataset focuses on accurate collision probabilities near the constraint region. GPU-accelerated Monte Carlo estimation, using the separating axis theorem for the collision checks, made dataset generation efficient.

In the humanoid robot scenario, joint configurations were sampled uniformly, and Monte Carlo sampling was performed only along a sparse set of rays cast outward from the center of the object. This reduced the dataset size while still capturing the characteristics of the collision probability field. Each sample was checked for collision using the narrow-phase collision checking algorithm of the Flexible Collision Library (FCL).
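The vehicle pipeline described above can be sketched in NumPy as follows. This is a hedged, CPU-only illustration rather than the original implementation: NumPy vectorization stands in for the GPU kernel, the obstacle pose is assumed to be Gaussian with a diagonal covariance, the ring-shaped distribution is assumed to be an annulus sampled uniformly by area, and all function names (`batched_corners`, `sat_overlap`, `sample_ring`, `collision_probability`) and numeric parameters are hypothetical.

```python
import numpy as np

# Hypothetical sketch: NumPy vectorization stands in for the GPU kernel.
# Poses are (x, y, yaw); rectangles are given by half-extents (half length, half width).


def batched_corners(centers, half_extents, yaws):
    """Corners of N oriented rectangles, shape (N, 4, 2)."""
    c, s = np.cos(yaws), np.sin(yaws)
    rot = np.stack([np.stack([c, -s], -1), np.stack([s, c], -1)], -2)  # (N, 2, 2)
    local = np.array([[1.0, 1.0], [-1.0, 1.0], [-1.0, -1.0], [1.0, -1.0]]) * half_extents
    return np.einsum("nij,kj->nki", rot, local) + centers[:, None, :]


def batched_axes(yaws):
    """The two edge normals of each rectangle, shape (N, 2, 2)."""
    c, s = np.cos(yaws), np.sin(yaws)
    return np.stack([np.stack([c, s], -1), np.stack([-s, c], -1)], 1)


def sat_overlap(corners_a, yaws_a, corners_b, yaws_b):
    """Separating axis test for N rectangle pairs; True where a pair overlaps."""
    axes = np.concatenate([batched_axes(yaws_a), batched_axes(yaws_b)], axis=1)  # (N, 4, 2)
    proj_a = np.einsum("nkd,nad->nak", corners_a, axes)  # (N, 4 axes, 4 corners)
    proj_b = np.einsum("nkd,nad->nak", corners_b, axes)
    separated = (proj_a.max(-1) < proj_b.min(-1)) | (proj_b.max(-1) < proj_a.min(-1))
    return ~separated.any(-1)  # overlap iff no candidate axis separates the pair


def sample_ring(rng, center, r_min, r_max, n):
    """Positions uniform by area in an annulus around `center` (assumption), shape (n, 2)."""
    r = np.sqrt(rng.uniform(r_min**2, r_max**2, n))
    phi = rng.uniform(0.0, 2.0 * np.pi, n)
    return center + np.stack([r * np.cos(phi), r * np.sin(phi)], -1)


def collision_probability(robot_pose, obs_mean, obs_std, half_robot, half_obs, n_samples, rng):
    """Monte Carlo estimate of P(collision) for a Gaussian obstacle pose (assumption)."""
    obs = obs_mean + obs_std * rng.standard_normal((n_samples, 3))
    robot = np.broadcast_to(robot_pose, (n_samples, 3))
    ca = batched_corners(robot[:, :2], half_robot, robot[:, 2])
    cb = batched_corners(obs[:, :2], half_obs, obs[:, 2])
    return float(sat_overlap(ca, robot[:, 2], cb, obs[:, 2]).mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    obs_mean = np.array([0.0, 0.0, 0.0])
    obs_std = np.array([0.3, 0.3, 0.1])  # hypothetical pose uncertainty (m, m, rad)
    half_car = np.array([2.25, 0.9])  # hypothetical 4.5 m x 1.8 m vehicle
    for xy in sample_ring(rng, obs_mean[:2], 3.0, 8.0, 5):
        pose = np.array([xy[0], xy[1], 0.0])
        p = collision_probability(pose, obs_mean, obs_std, half_car, half_car, 10_000, rng)
        print(f"robot at ({xy[0]:+.2f}, {xy[1]:+.2f}) -> P(collision) ~ {p:.3f}")
```

Running the module prints estimates for five ring-sampled robot positions; in a hypothetical dataset generator, each (robot pose, obstacle variance, estimate) triple would become one training sample. The separating axis theorem suffices here because two convex polygons are disjoint exactly when their projections onto one of their edge normals are disjoint, so a pair of rectangles needs only four candidate axes.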

Results

In the autonomous vehicle case, a sufficiently large and balanced dataset could be generated and was used to train the ReDCPF model, allowing it to learn to approximate collision probabilities effectively. In the humanoid robot scenario, however, generating the 3D dataset was significantly more time-consuming because the more complex task could not be accelerated on a GPU: it took approximately three to four orders of magnitude longer than in the autonomous vehicle case. Given this cost, it was not feasible to generate datasets for multiple variances within a reasonable timeframe.

Discussion

The limitations of the dataset generation approach became evident in the high-dimensional humanoid robot scenario. The extensive computational demands prevented the generation of a diverse dataset covering different variances, which limited the training possibilities for the ReDCPF model in this case. Overall, the results show that a suitable dataset can be generated for the autonomous vehicle scenario, while highlighting the challenges of generating a comprehensive dataset for the humanoid robot scenario due to the substantial increase in computational requirements.