Projects

Hessian scientists from various disciplines use high-performance computers for their research.

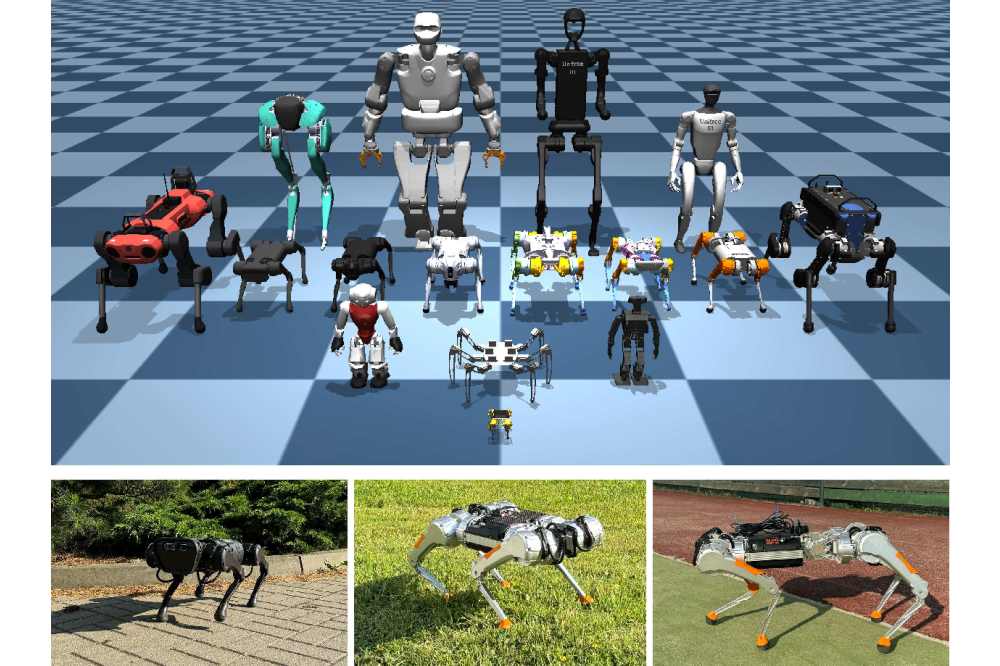

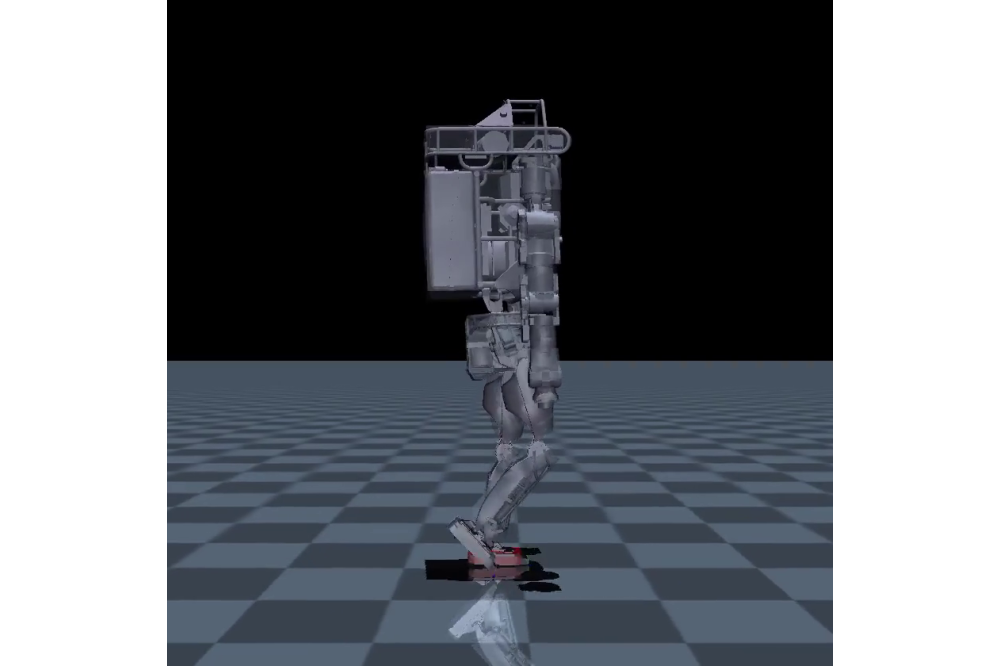

The rapid development of robotics has enabled legged robots capable of performing highly dynamic tasks such as walking ...

This work aimed to apply deep learning, specifically Mamba, to the problem of codon optimisation in order to be able to ...

Variational inference with Gaussian mixture models (GMMs) can be used to learn highly tractable approximations of ...

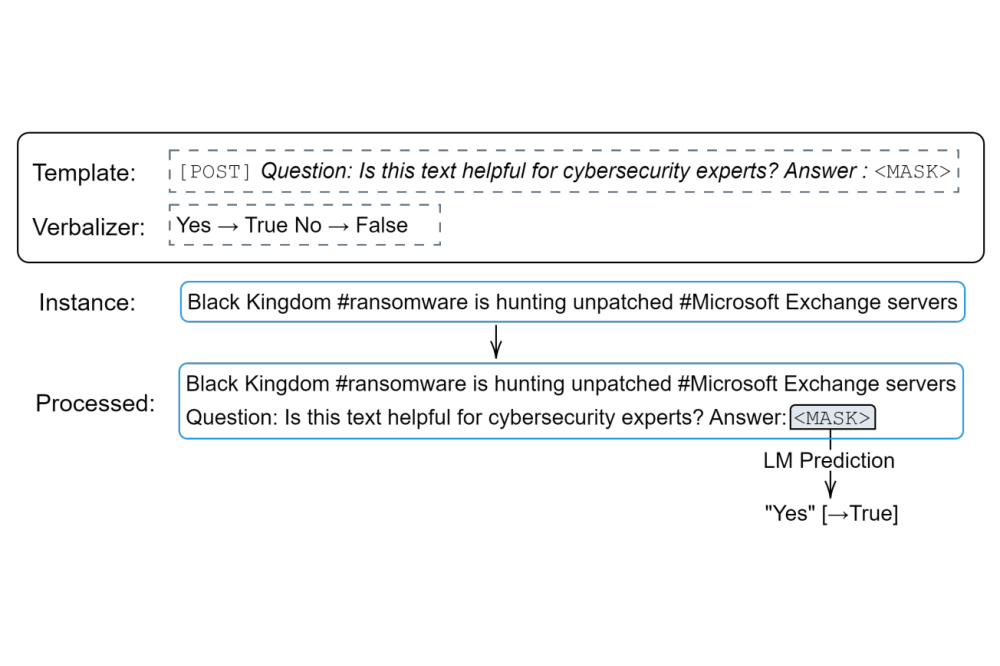

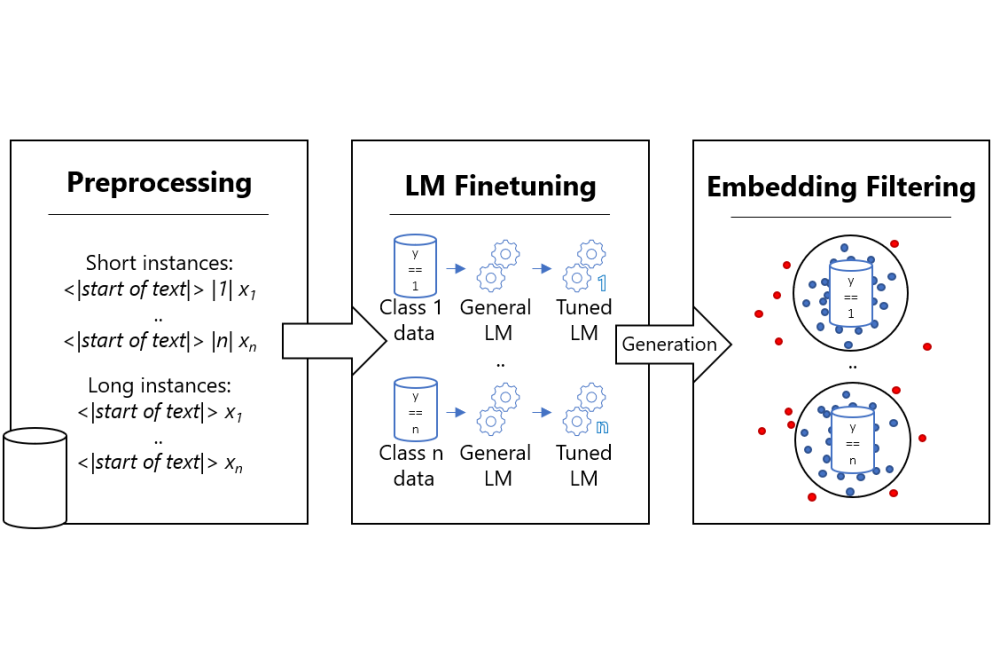

The increase in complex cyber-attacks illustrates the vulnerability of society and information infrastructure. In ...

Robotics platforms can benefit massively from novel Deep Reinforcement Learning approaches. However, robotics has ...

Artificial intelligence is currently developing faster than ever and introduces many different possibilities. Our client ...

Many problems in machine learning involve inference from intractable distributions. For example, when learning latent ...

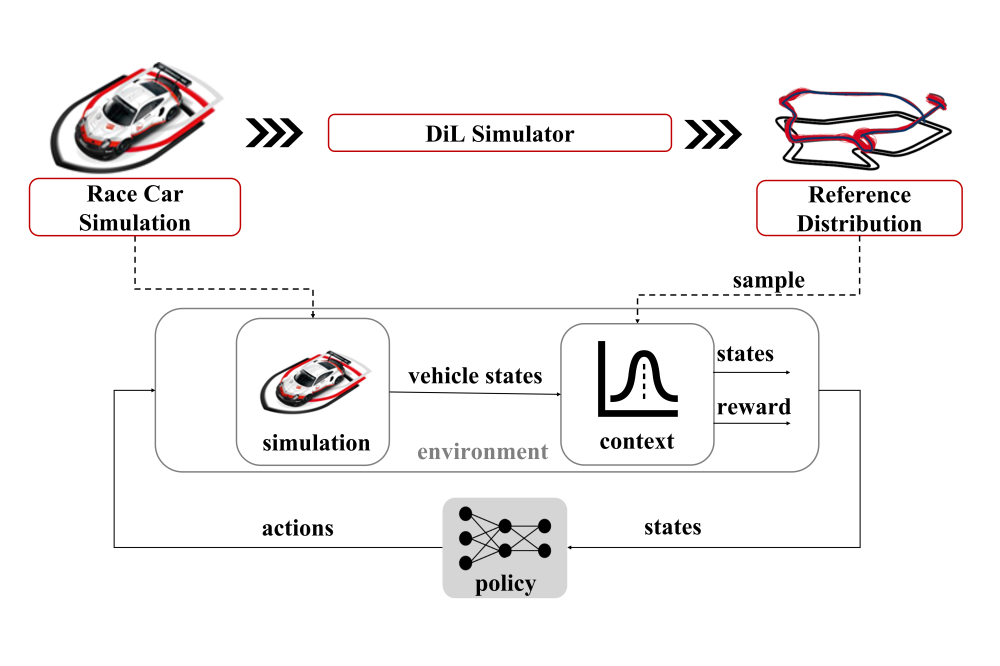

In order to facilitate rapid prototyping and testing in the advanced motorsport industry, we consider the problem of ...

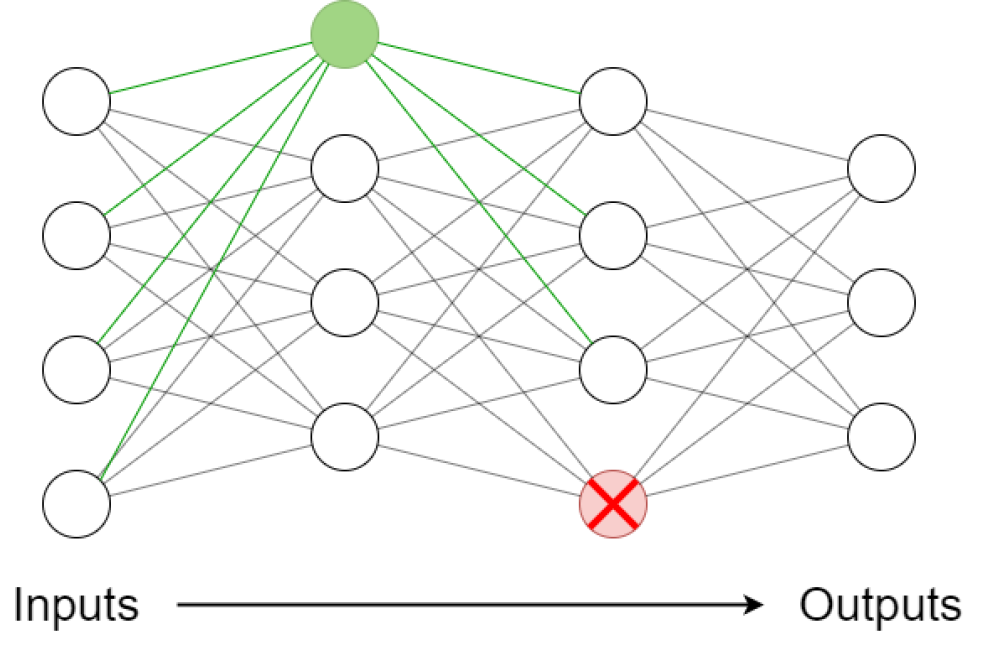

Neural networks are usually trained with a static architecture. However, the fields of growing and pruning, or ...

The increase in complex cyber-attacks illustrates the vulnerability of society and information infrastructure. In ...

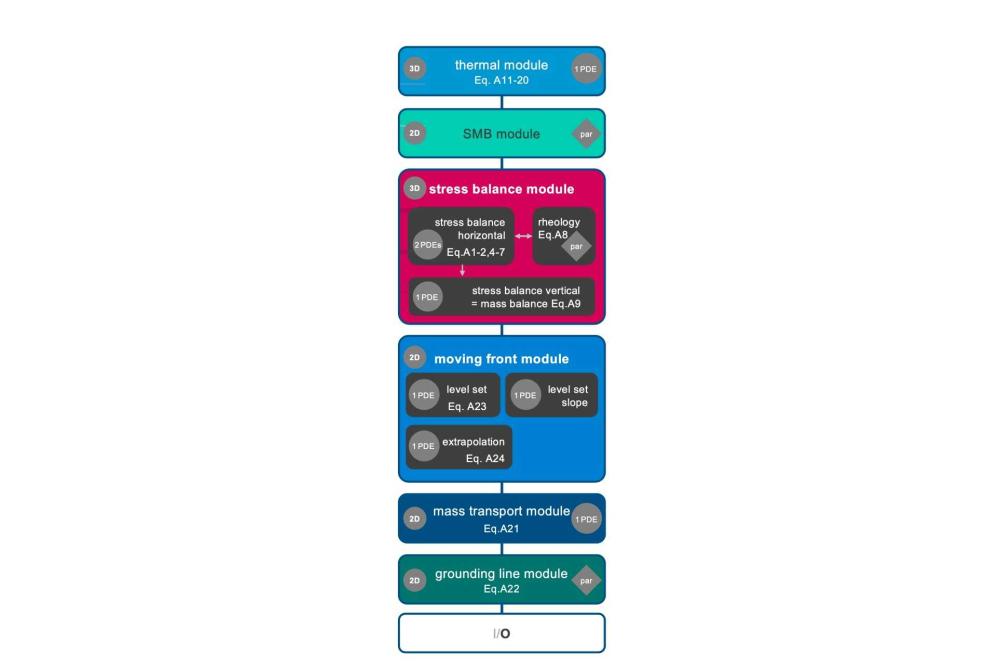

Earth modeling is a broad field spanning different scientific domains, e.g., ice modeling. All domains have in common that ...

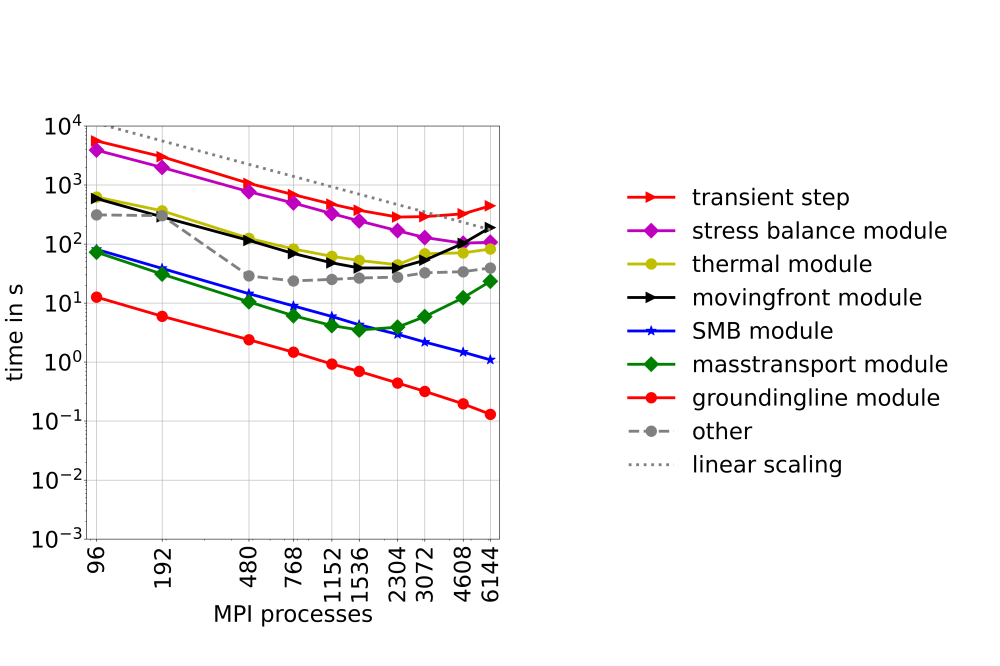

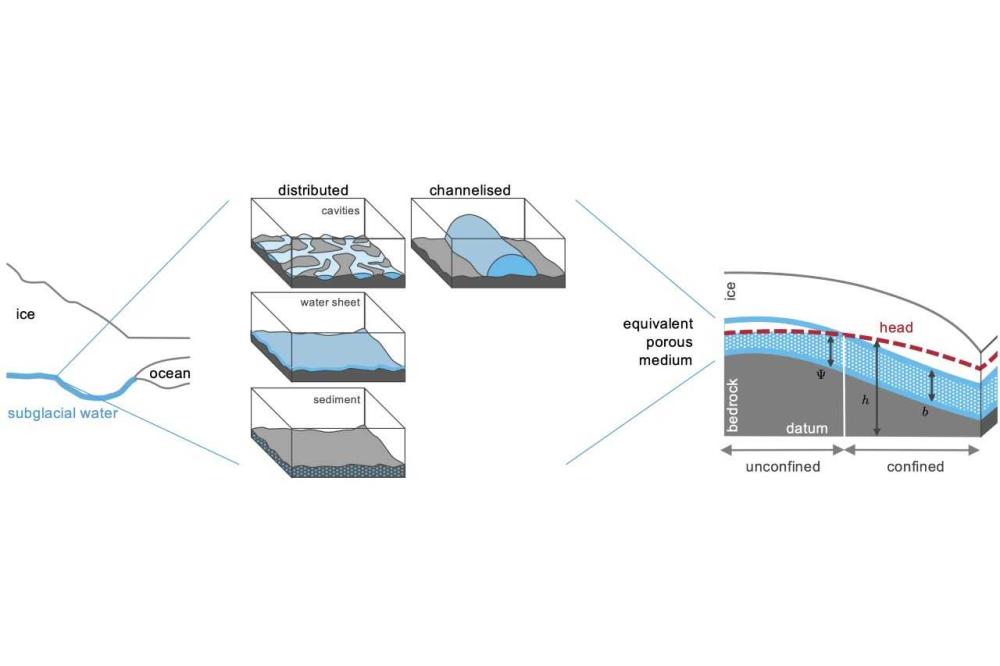

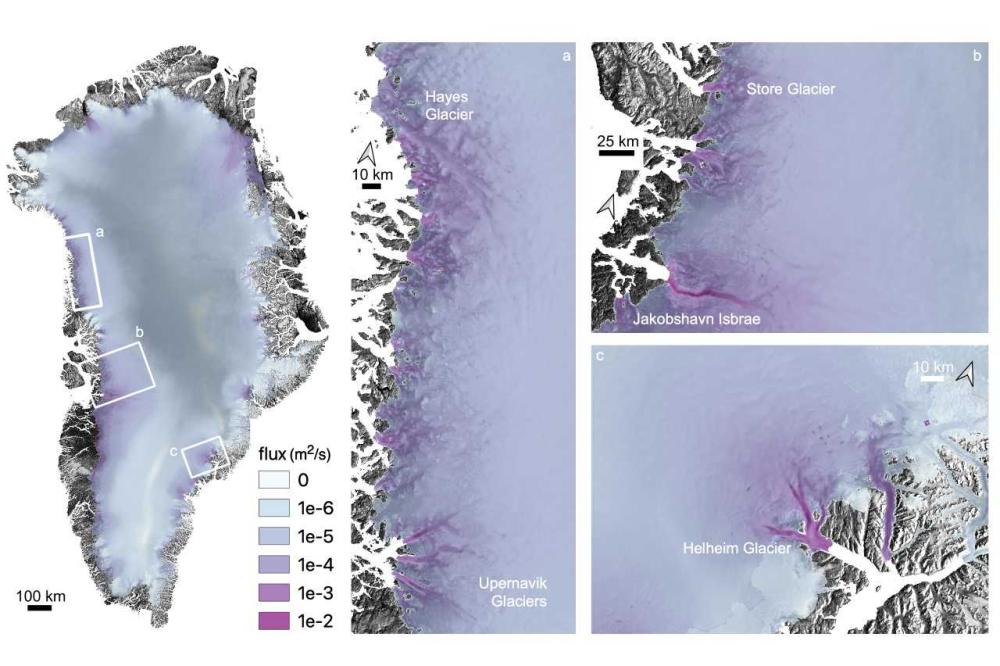

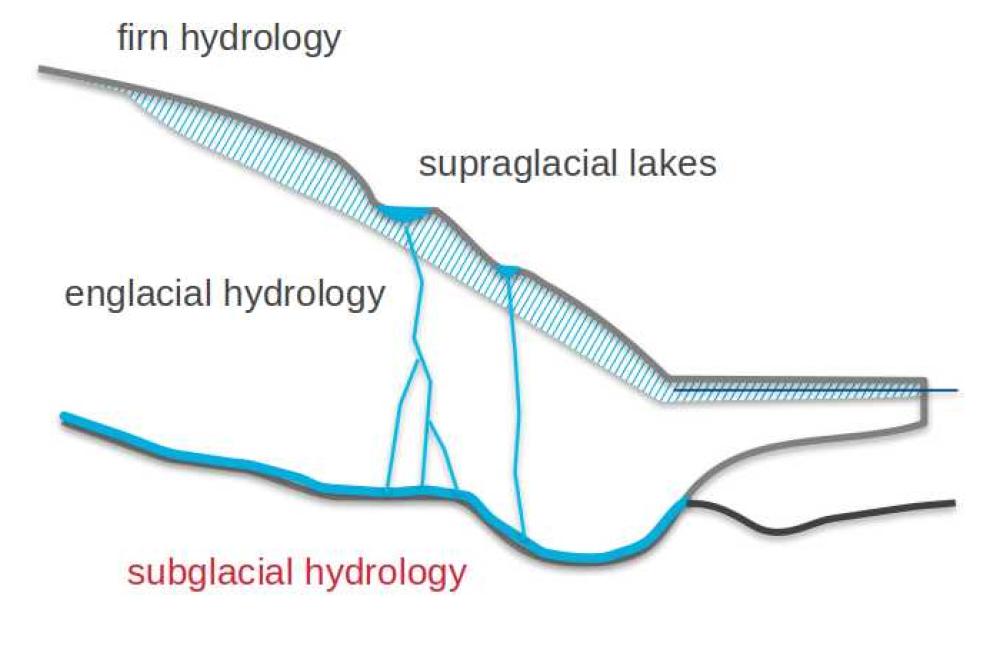

In the context of Earth system modeling, the simulation of ice sheets is an important aspect. Ice-sheet modeling contains ...

Organ-like three-dimensional cell aggregates developed in the laboratory represent versatile cellular models for drug ...

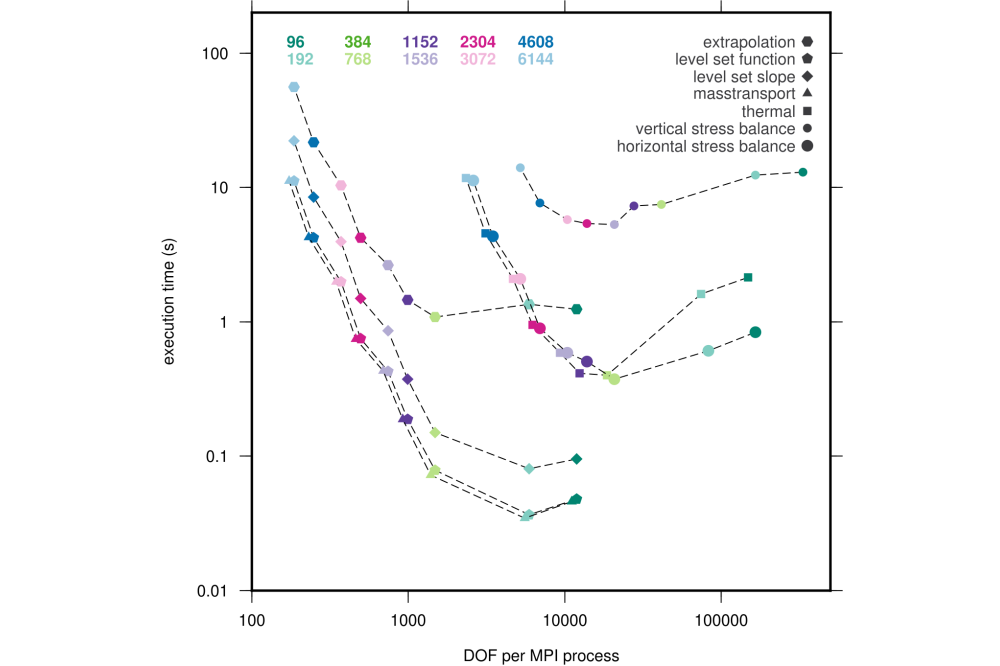

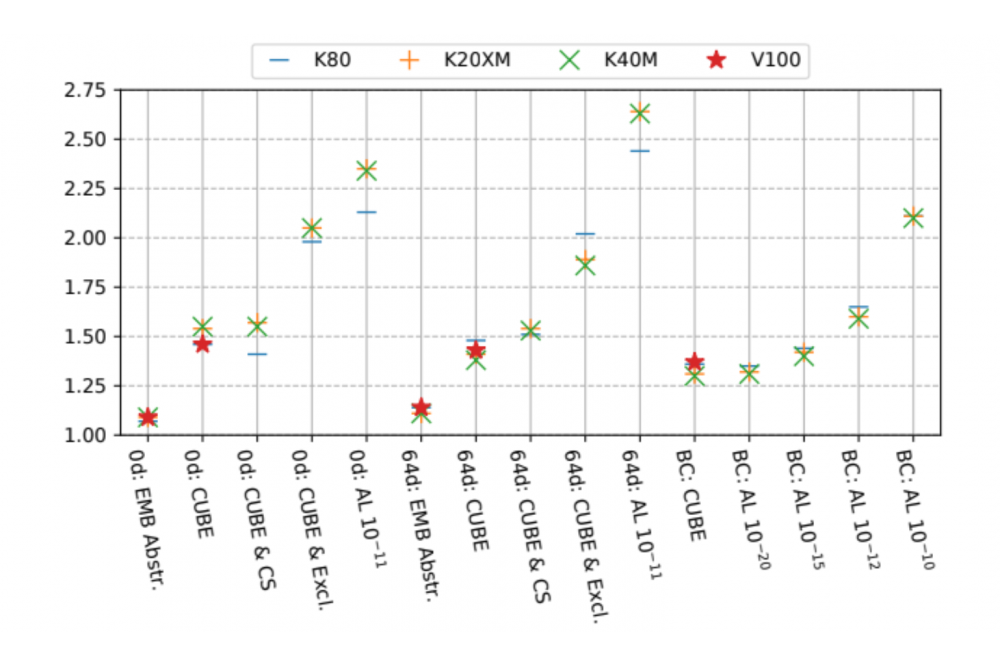

Empirical performance modeling is a proven instrument to analyze the scaling behavior of HPC applications. Using a set ...

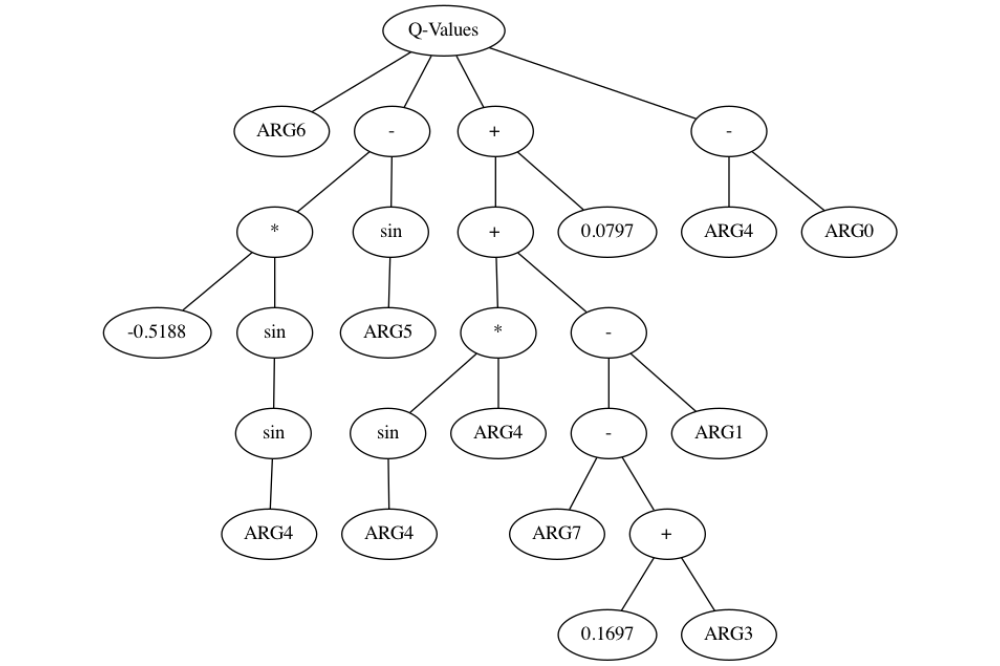

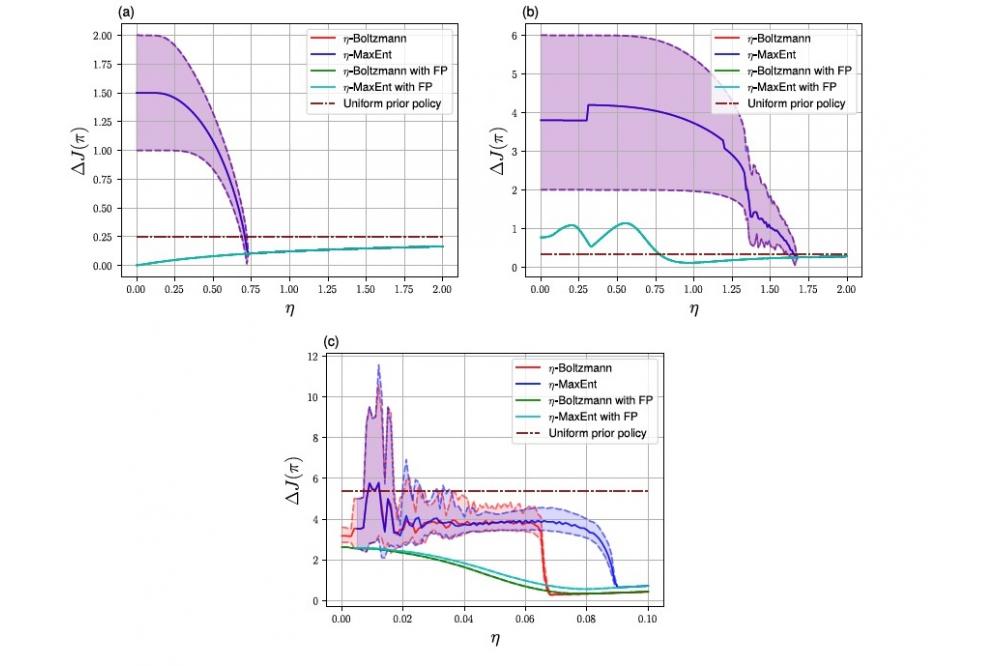

Reinforcement Learning (RL) has proven empirically to be a very successful approach to solving sequential decision ...

Recurrent state-space models (RSSMs) are highly expressive models for learning patterns in time series data and system ...

This project aimed at solving multi-label classification problems using rule learning algorithms. Multi-label ...

The bursty nature of network traffic is one of the main reasons for congestion in data centers, since traffic loads are ...

Identifying scalability bugs in parallel applications is a vital but also laborious and expensive task. Empirical ...

Stochastic search algorithms are problem-independent algorithms well suited to black-box optimization of an objective ...

Spacecraft missions of the European Space Agency (ESA) are required to meet specific probabilistic requirements ...

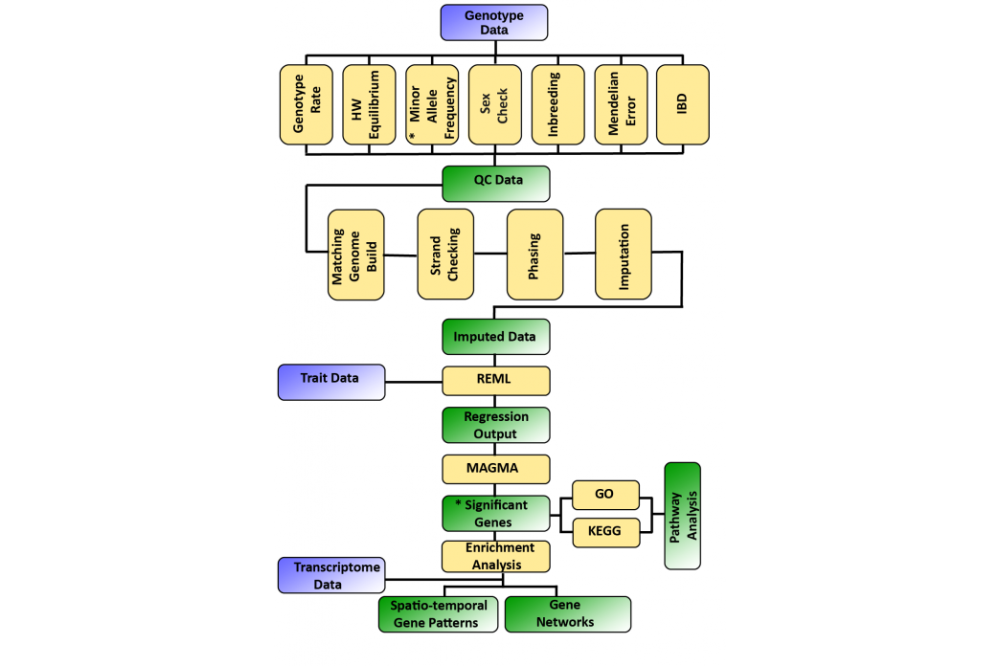

The complex architecture of neuropsychiatric disorders comprises distinct neuropsychological traits. Previous studies ...