End-to-end Learning for Multi-Embodiement Locomotion

Einleitung

The rapid development of robotics has enabled legged robots capable of performing highly dynamic tasks such as walking on uneven terrain, jumping, or even completing challenging parkour courses. However, training robots of different morphologies, such as quadrupeds, bipeds, and hexapods, still is a significant challenge for current training paradigms. To overcome these challenges, scalable Deep Reinforcement Learning algorithms and parallelized simulations significantly improve the learning for such robots when coupled with clever neural network policy architectures.

Methoden

In this research, a novel framework called URMA (Unified Robot Morphology Architecture) is used to create a single neural network architecture capable of learning control policies for various robot morphologies. The approach involves training the robots in a simulation environment, where multiple robots are trained simultaneously. Our method uses a combination of DRL and a morphology-aware neural network architecture to allow robots to share knowledge between their embodiements. The framework is designed to be morphology-agnostic, meaning that it can be applied to any robot with any number of joints. Key to our approach is the use of special encoders and decoders that translate the robot’s specific joint and sensor information into a shared representation space. This enables the model to generalize its learning and control a wide range of robots.

Ergebnisse

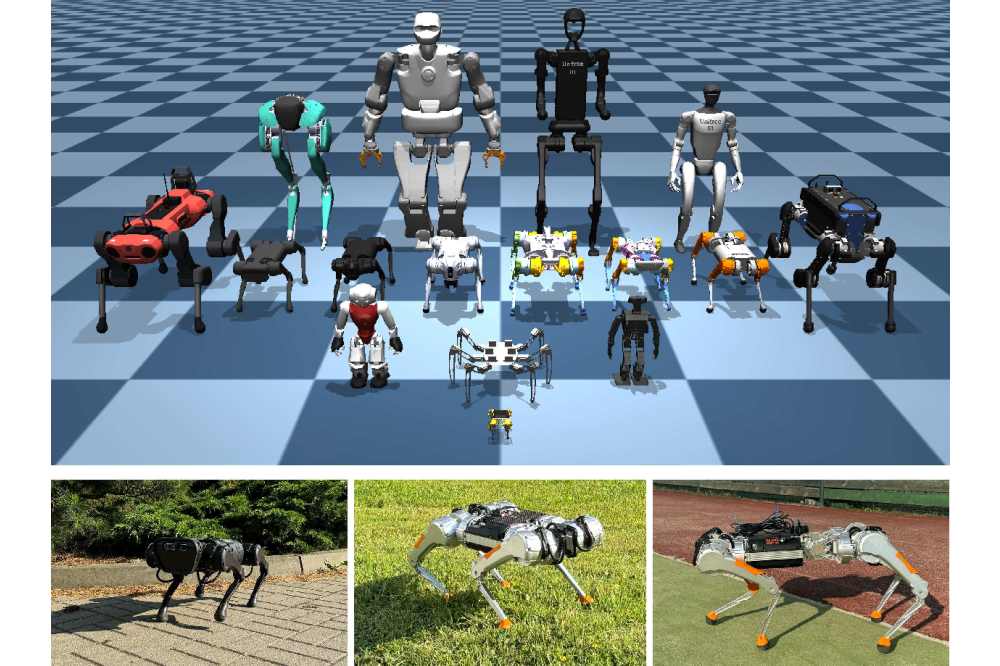

In the first phase of the project, we successfully trained a locomotion policy on 16 different robot platforms, including quadrupeds, hexapods, and humanoids. The trained policy was able to control these robots both in simulation and also on selected real-world environments. For example, zero-shot transfer of the trained policy to the MAB Silver Badger quadruped robot in the real world was achieved without any further fine-tuning, demonstrating the framework’s robustness and adaptability. A significant achievement in the later stages was the successful zero-shot and few-shot transfer of the trained policy to robots not seen during trainingt. The framework also demonstrated an ability to handle missing sensor data and adapt to changes in robot morphology. These results highlight the potential of the URMA framework to serve as a foundation model for legged locomotion, enabling rapid deployment across a wide variety of robot platforms.

Diskussion

The results of our project demonstrate the effectiveness of the URMA framework in learning robust control policies for different types of robots. The use of HPC was crucial in achieving these results, as it enabled the parallel simulation and training of multiple robot platforms, reducing the overall training time. The ability of the framework to transfer learned policies to new robots without additional training underscores its potential for practical applications in robotics.

However, challenges remain, particularly in generalizing to highly diverse or completely new robot morphologies. While the framework shows strong performance on quadrupeds and humanoids, more work is needed to handle extreme variations in robot design or to improve the fine-tuning process for entirely new platforms. Future research will focus on extending the framework’s capabilities to more complex locomotion tasks, such as highly dynamic locomotion in challenging terrain and integrating advanced sensing capabilities.