Robot Skill Learning

Introduction

The project investigated probabilistic motor skill learning for simulated robots. In particular, it examined online adaptation mechanisms and transfer learning for planning. More concretely, the robot starts from an initial skill that it has previously learned and must adapt this skill so that it generalizes to slightly different settings. This allows the robot to learn the transfer efficiently and to extend its capabilities easily. Because robot learning requires many trial-and-error attempts, the experiments must be parallelized to gather enough samples to identify good solutions. We therefore used the Lichtenberg Cluster to simulate our agents in parallel and to test different hyperparameters.

Methods

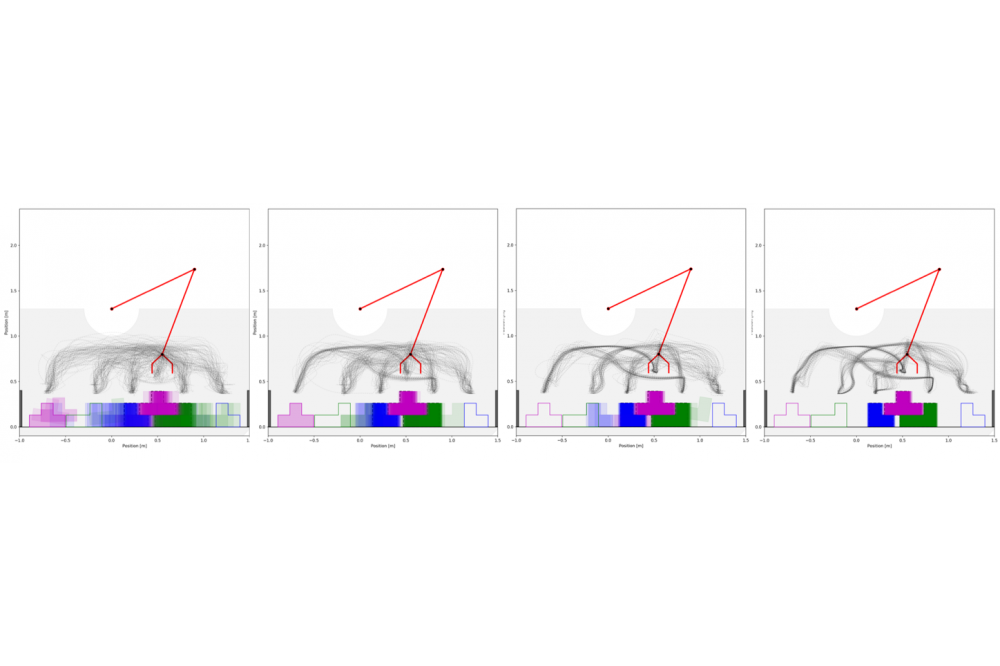

During the project we used the reinforcement learning algorithm Relative Entropy Policy Search (REPS) to search for good movement representations, which were modelled using Probabilistic Movement Primitives (ProMPs). A ProMP models the movement as a distribution over parameters, and REPS searches for the parameter distribution that maximizes the expected reward. To this end, the algorithm samples different ProMP parameters and executes them on the robot. Afterwards, the sampled parameters are weighted by the achieved reward and used to update the distribution. To ensure that two consecutive policies do not differ too much, the Kullback-Leibler divergence between the two consecutive distributions is bounded.
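The sample, weight and update loop described above can be illustrated with a minimal sketch. It is not the project's implementation. It assumes a diagonal Gaussian over two parameters, a hypothetical toy reward (`reward`), and a coarse grid search over the temperature `eta` in place of a proper optimizer for the REPS dual. All function names and default values are chosen for illustration only.

```python
import math
import random


def reps_weights(rewards, epsilon):
    """Normalized sample weights for a KL bound epsilon (episodic REPS dual)."""
    r_max = max(rewards)
    shifted = [r - r_max for r in rewards]  # shift for numerical stability
    n = len(rewards)

    def dual(eta):
        # g(eta) = eta * epsilon + r_max + eta * log(mean(exp((R - r_max) / eta)))
        mean_exp = sum(math.exp(s / eta) for s in shifted) / n
        return eta * epsilon + r_max + eta * math.log(mean_exp)

    # Assumption: a coarse log-spaced grid (1e-4 .. 1e4) replaces a real optimizer.
    etas = [10.0 ** (k / 20.0) for k in range(-80, 81)]
    eta = min(etas, key=dual)
    weights = [math.exp(s / eta) for s in shifted]
    total = sum(weights)
    return [w / total for w in weights]


def weighted_gaussian_update(samples, weights):
    """Weighted maximum-likelihood fit of a diagonal Gaussian."""
    dim = len(samples[0])
    mean = [sum(w * x[d] for w, x in zip(weights, samples)) for d in range(dim)]
    var = [sum(w * (x[d] - mean[d]) ** 2 for w, x in zip(weights, samples))
           for d in range(dim)]
    std = [math.sqrt(v) + 1e-9 for v in var]  # small floor avoids a zero std
    return mean, std


def reward(theta, target=(1.0, -0.5)):
    """Hypothetical toy reward: negative squared distance to a target vector."""
    return -sum((t - g) ** 2 for t, g in zip(theta, target))


def run_reps(iterations=30, n_samples=50, epsilon=0.5, seed=0):
    """Repeat sampling, reward weighting and distribution update."""
    rng = random.Random(seed)
    mean, std = [0.0, 0.0], [1.0, 1.0]  # initial "skill" distribution
    for _ in range(iterations):
        # Sample candidate parameter vectors from the current distribution.
        samples = [[rng.gauss(m, s) for m, s in zip(mean, std)]
                   for _ in range(n_samples)]
        rewards = [reward(theta) for theta in samples]
        # Weight samples by reward under the KL bound, then refit the Gaussian.
        weights = reps_weights(rewards, epsilon)
        mean, std = weighted_gaussian_update(samples, weights)
    return mean, std
```

Calling `mean, std = run_reps()` returns the adapted distribution. In this toy setting the mean should move from the initial `[0.0, 0.0]` toward the hypothetical target. A smaller `epsilon` keeps consecutive distributions closer together and so makes each update more conservative.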

Results

The project showed that movement primitives can be transferred to slightly different tasks. However, it only evaluated a small toy example with a simulated robot with two degrees of freedom. Future work must therefore evaluate whether this transfer extends to higher-dimensional and sequential tasks.